Advanced Knowledge Base: AI Settings

Introduction

Tiledesk's AI settings provide powerful tools for fine-tuning the behavior and performance of your chatbot. These settings include maximum number of tokens, temperature, chunks, system context, and prompt. This tutorial will explain each of these settings and how they impact your Knowledge Base.

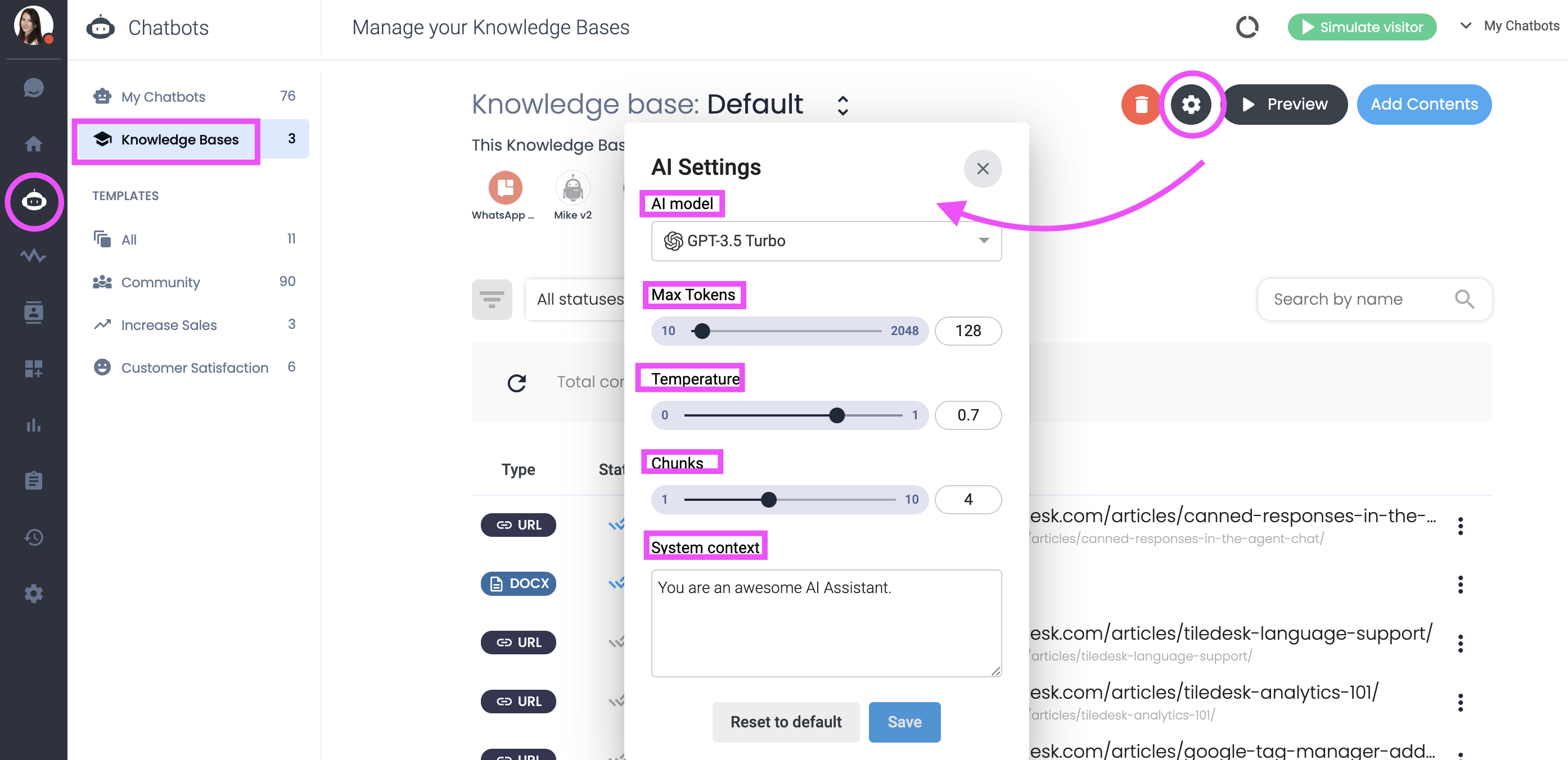

Access AI Settings

1. Once logged in, click on the Bots section in the main navigation menu.

2. Select the bot you want to configure.

3. Go to the Knowledge Bases tab.

4. Click on the gears icon to access and configure the AI settings.

AI Settings Explained

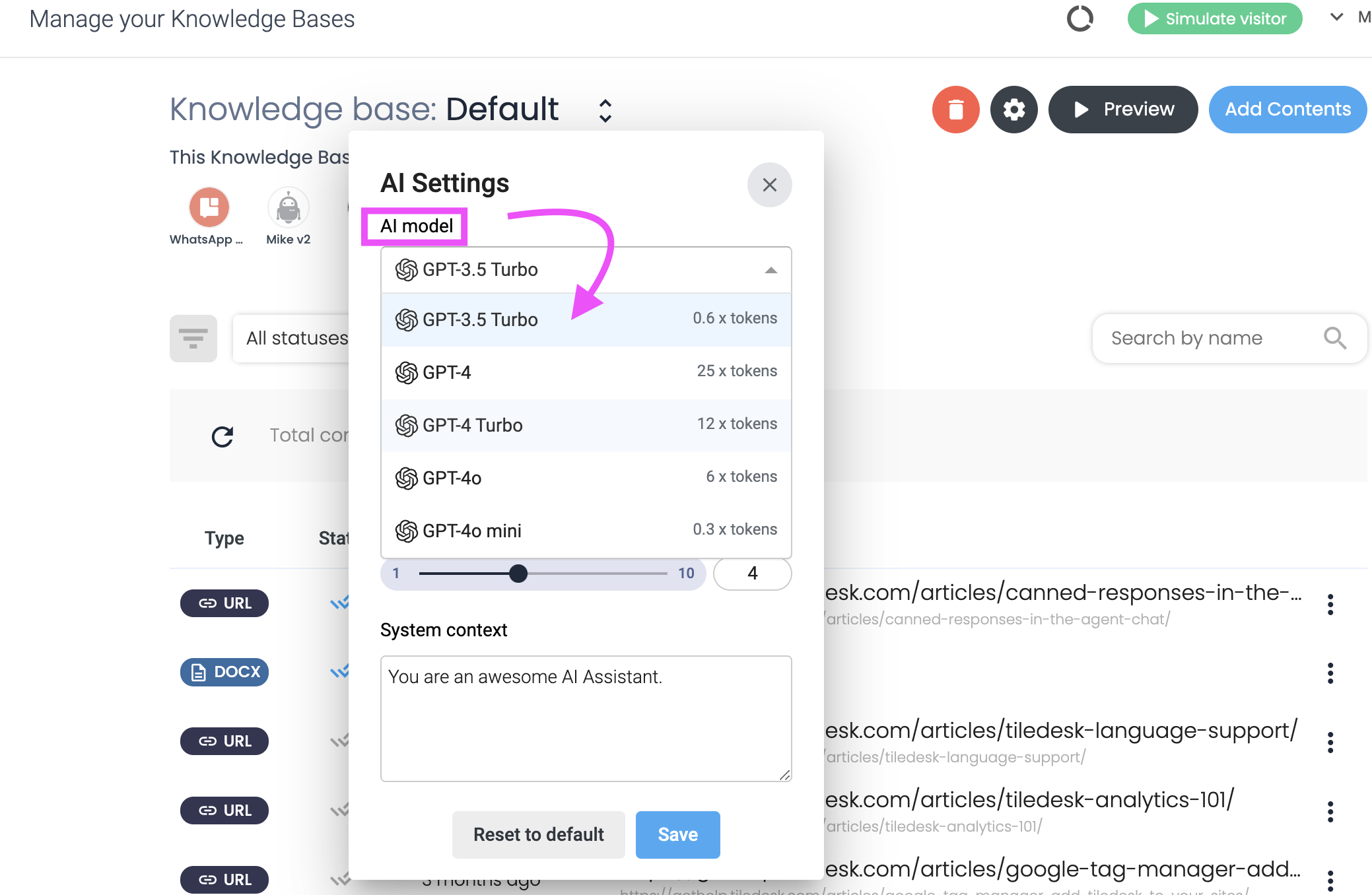

1. AI Models

You can choose one of the following AI models from the drop-down menu: GPT-3.5 Turbo, GPT-4, GPT-4 Turbo, GPT-4o, and GPT-4o Mini. As you can see from the picture below, each model consumes a different number of tokens.

Differences Among OpenAI Models

1. GPT-3.5 Turbo

- Definition: A fast, low-cost chat model from the GPT-3.5 series, well suited to simple, high-volume conversations.

- Token Consumption: Low cost per token, considerably cheaper than GPT-4 and GPT-4 Turbo.

2. GPT-4

- Definition: The original GPT-4 model, with stronger reasoning and language understanding than GPT-3.5 Turbo.

- Token Consumption: The highest cost per token of the models listed here.

3. GPT-4 Turbo

- Definition: A faster, more cost-efficient successor to GPT-4 with a larger context window, retaining most of GPT-4's capabilities.

- Token Consumption: Lower cost per token than standard GPT-4.

4. GPT-4o

- Definition: OpenAI's multimodal ("omni") model, offering GPT-4-level quality on text at higher speed.

- Token Consumption: Lower cost per token than GPT-4 Turbo.

5. GPT-4o Mini

- Definition: A smaller, faster variant of GPT-4o, designed for lightweight tasks where cost and response time matter most.

- Token Consumption: The lowest cost per token of the models listed here.

| Model | Definition | Token Consumption |
|---|---|---|
| GPT-3.5 Turbo | Fast, low-cost model from the GPT-3.5 series | Low cost, much cheaper than GPT-4 and GPT-4 Turbo |
| GPT-4 | Original GPT-4 model, stronger reasoning than GPT-3.5 Turbo | Highest cost of the listed models |
| GPT-4 Turbo | Faster, more cost-efficient successor to GPT-4 | Lower cost than GPT-4 |
| GPT-4o | Multimodal ("omni") model with GPT-4-level text quality | Lower cost than GPT-4 Turbo |
| GPT-4o Mini | Smaller, faster variant of GPT-4o | Lowest cost of the listed models |

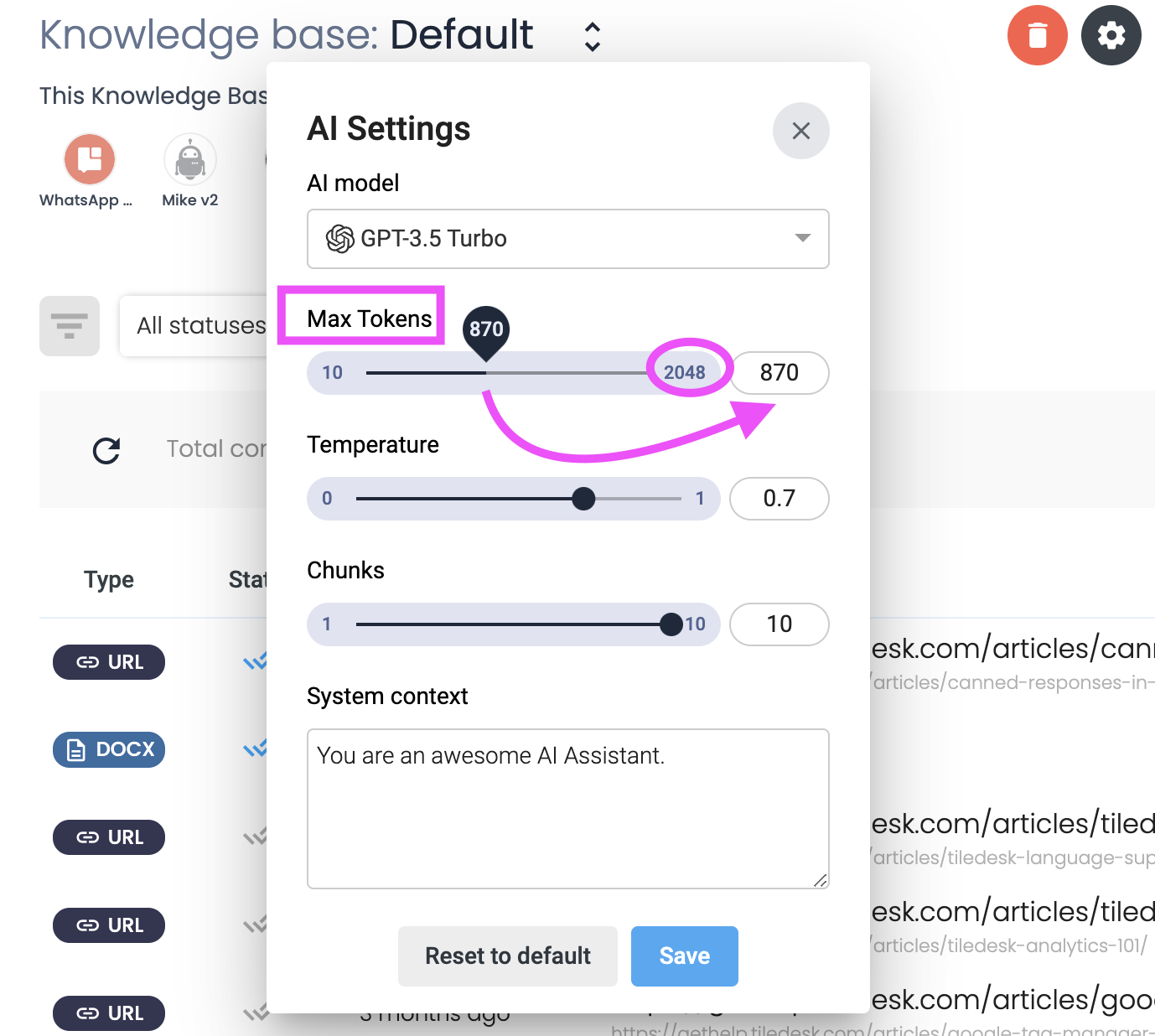

2. Maximum Number of Tokens

- Definition: Tokens are the units of text (whole words or pieces of words) that the AI uses to process and generate responses. The maximum number of tokens setting caps the length of each response.

- Impact: A higher token limit allows for longer, more detailed responses. A lower limit keeps responses short, but an answer that needs more room than the limit allows may be cut off.

- How to Configure:

- Locate the Maximum Number of Tokens setting in the AI Settings.

- Enter the desired number of tokens, up to a maximum of 2048 (e.g., 100, 200, 500).
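To get a feel for what a given limit allows, the sketch below estimates how many tokens a piece of text uses and keeps a requested limit within the 2048 maximum mentioned above. It is only an illustration: the helper names are hypothetical, and the figure of roughly four characters per token is a rule of thumb for English text, not an exact count.

```python
import math

MAX_TOKENS_LIMIT = 2048  # upper bound stated in this tutorial


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough estimate only: ~4 characters per token is a rule of thumb for English."""
    return math.ceil(len(text) / chars_per_token)


def clamp_max_tokens(requested: int) -> int:
    """Keep the requested value inside the range 1..MAX_TOKENS_LIMIT."""
    return max(1, min(requested, MAX_TOKENS_LIMIT))


sample_answer = "You can reset your password from the login page. " * 10
print("Estimated tokens:", estimate_tokens(sample_answer))
print("Limit to enter:", clamp_max_tokens(5000))
```

If the estimate for a typical answer is close to your limit, consider raising the limit so that responses are not cut off.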

3. Temperature

- Definition: Temperature controls the randomness of the AI's responses. A lower temperature makes the responses more deterministic and focused, while a higher temperature makes them more creative and varied.

- Impact: Adjusting the temperature helps balance between consistency and creativity in responses.

- How to Configure:

- Locate the Temperature setting.

- Set the temperature value (e.g., 0.2 for more focused responses, 0.8 for more creative responses).
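The effect of temperature can be illustrated with a small calculation. Language models typically turn candidate scores into probabilities with a softmax, and temperature divides the scores first. The sketch below uses made-up scores for three candidate words; it shows the general technique, not Tiledesk's or OpenAI's internal code.

```python
import math


def softmax_with_temperature(scores, temperature):
    """Convert raw scores to probabilities, dividing by the temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be greater than 0 in this sketch")
    scaled = [s / temperature for s in scores]
    highest = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.5]  # made-up scores for three candidate words
for temperature in (0.2, 0.8):
    probs = softmax_with_temperature(scores, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

At the lower temperature almost all of the probability goes to the top-scoring word, which is why responses become more predictable. At the higher temperature the other words keep a meaningful share, so responses vary more.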

4. Chunks

- Definition: Chunks are portions of content that the AI uses to create responses. Breaking content into chunks helps the AI process information more efficiently.

- Impact: Proper chunking ensures the AI can handle large documents or datasets without performance issues.

- How to Configure:

- Locate the Chunks setting.

- Set the number of chunks to use, from 1 to 10.
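As an illustration of how chunks feed into an answer, the sketch below ranks a few content chunks against a question and keeps only the best ones. It assumes the Chunks value determines how many chunks are handed to the AI, and it uses a simple word-overlap score as a stand-in; the real retrieval method is not described in this tutorial, and all function names are hypothetical.

```python
import re


def words(text: str) -> set:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_chunks(question: str, chunks: list, k: int) -> list:
    """Return the k chunks sharing the most words with the question."""
    if not 1 <= k <= 10:
        raise ValueError("k must be between 1 and 10")
    question_words = words(question)
    ranked = sorted(chunks, key=lambda c: len(question_words & words(c)), reverse=True)
    return ranked[:k]


chunks = [
    "Our support team is available Monday to Friday.",
    "To reset your password, open the login page and click Forgot password.",
    "Invoices are sent by email at the start of each month.",
]
selected = top_chunks("How do I reset my password?", chunks, k=1)
print(selected)
```

A larger value gives the AI more material to draw on, at the cost of more tokens per request.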

5. System Context

- Definition: System context provides the AI with background information or guidelines that influence its behavior and responses. It sets the overall tone and direction for the AI.

- Impact: A well-defined system context helps the AI understand its role and the type of responses it should generate.

- How to Configure:

- Locate the System Context setting.

- Enter the context information, such as "You are a helpful customer support assistant."
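In chat-style models such as the OpenAI models listed earlier, this kind of background instruction is usually sent as a "system" message ahead of the user's question. The sketch below builds such a message list. How Tiledesk assembles its requests internally is not covered here, so treat the function as a hypothetical illustration of the idea.

```python
def build_messages(system_context: str, user_question: str) -> list:
    """Place the system context first so it frames every user message."""
    return [
        {"role": "system", "content": system_context},
        {"role": "user", "content": user_question},
    ]


messages = build_messages(
    "You are a helpful customer support assistant.",
    "How do I change my email address?",
)
for message in messages:
    print(message["role"], "->", message["content"])
```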

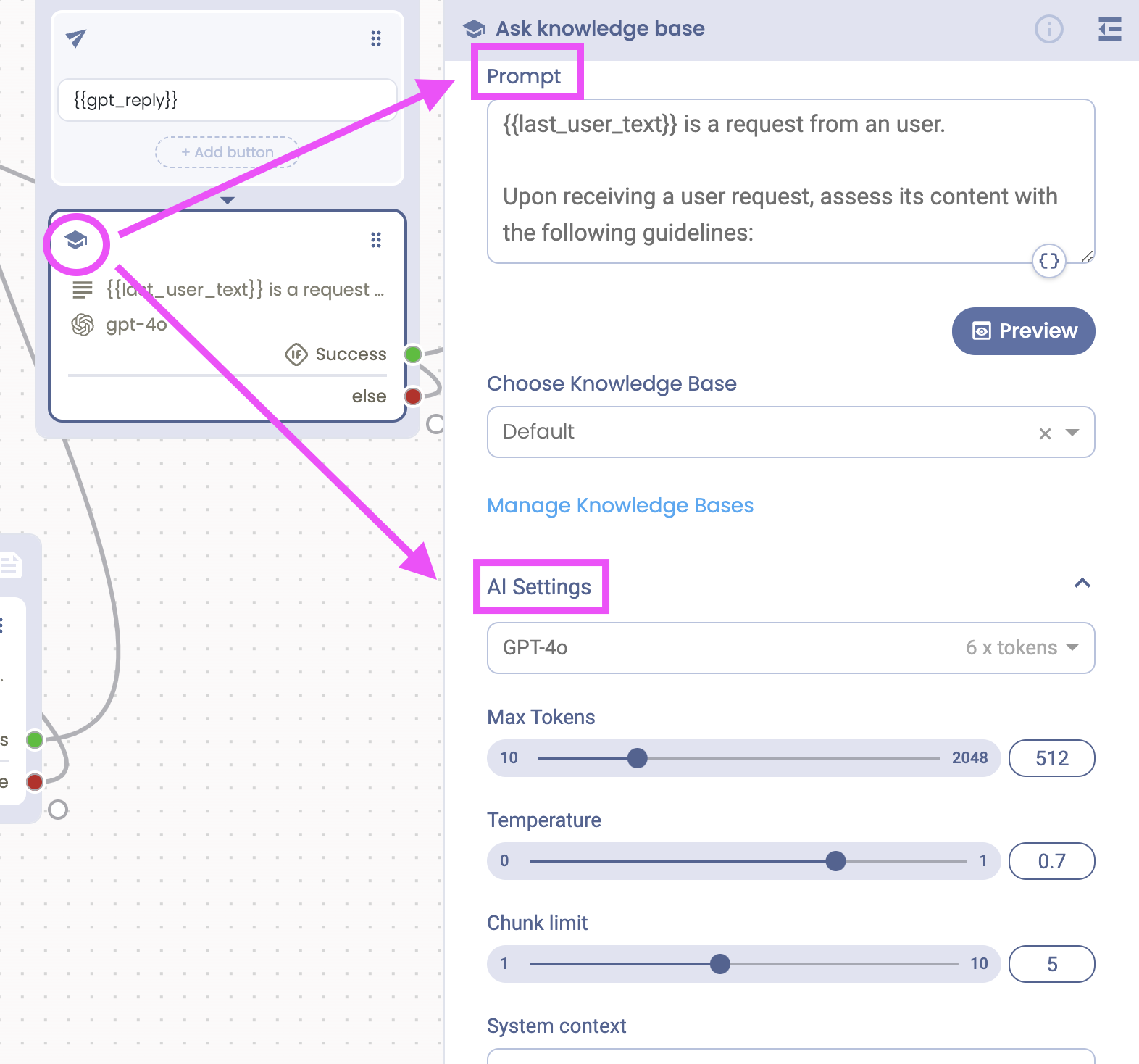

Bot Fine-tuning & Prompt

Now that you've set your AI preferences, you can go further and start drafting your prompt within the chatbot flow. Note that each bot you create can have its own AI settings.

- Definition: The prompt is the initial instruction provided to the AI, guiding it on how to generate responses based on user input.

- Impact: The prompt shapes the AI's initial understanding and response generation.

- How to Configure:

- Go to the Design Studio and locate the Prompt field within any AI action (e.g., Ask Knowledge Base, GPT Task, AI Assistant).

- Enter a clear and concise prompt, based on what you'd like to achieve with a specific Bot, as below.
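One way to keep a prompt clear and concise is to write it as a short template with a slot for the user's question. The sketch below is a hypothetical example: the wording and the `{question}` placeholder are illustrative and are not Tiledesk's own variable syntax.

```python
PROMPT_TEMPLATE = (
    "Answer the customer's question using only the Knowledge Base content.\n"
    "If the answer is not in the Knowledge Base, say you don't know.\n"
    "Question: {question}"
)


def render_prompt(question: str) -> str:
    """Fill the template with the user's question, trimmed of extra spaces."""
    return PROMPT_TEMPLATE.format(question=question.strip())


print(render_prompt("  Where is my order? "))
```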

Configuring AI Settings - Recap

- Access AI Settings: Click the gears icon under the Knowledge Bases tab to open the AI Settings.

- Adjust Maximum Number of Tokens: Set the desired token limit based on the complexity and length of responses you need.

- Set Temperature: Choose a temperature value that balances creativity and consistency.

- Define Chunks: Choose a Chunks value, from 1 to 10, that optimizes performance.

- Input System Context: Provide relevant background information or guidelines to shape the AI's behavior.

Bear in mind that while these are the overall AI settings, you can still configure each bot's AI settings within the Design Studio according to your specific needs.
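Before entering values in the dashboard, it can help to note them down and check them against the limits given in this tutorial. The sketch below is a hypothetical checklist in code form: the class and field names are made up for illustration, and the 0 to 1 temperature range is an assumption, so check the control in your own dashboard.

```python
from dataclasses import dataclass


@dataclass
class AISettings:
    max_tokens: int = 500
    temperature: float = 0.2
    chunks: int = 3
    system_context: str = "You are a helpful customer support assistant."

    def problems(self) -> list:
        """Return a list of human-readable issues; empty means all checks pass."""
        issues = []
        if not 1 <= self.max_tokens <= 2048:  # maximum stated in this tutorial
            issues.append("max_tokens must be between 1 and 2048")
        if not 0.0 <= self.temperature <= 1.0:  # assumed range, not confirmed
            issues.append("temperature must be between 0 and 1")
        if not 1 <= self.chunks <= 10:  # range stated in this tutorial
            issues.append("chunks must be between 1 and 10")
        if not self.system_context.strip():
            issues.append("system_context should not be empty")
        return issues


print(AISettings().problems())
print(AISettings(max_tokens=4096, chunks=0).problems())
```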

Best Practices

- Experiment with Settings: Adjust the settings incrementally and test the responses to find the optimal configuration for your use case.

- Monitor Performance: Regularly review the AI's performance and make adjustments as needed to maintain the quality of responses.

- Keep Context Relevant: Ensure that the system context and prompt are relevant to the specific Knowledge Base and user interactions.

Conclusion

Configuring the AI settings for your Knowledge Base on Tiledesk allows you to fine-tune the chatbot's behavior and response quality. By understanding and adjusting the maximum number of tokens, temperature, chunks, system context, and prompt, you can enhance the effectiveness and efficiency of your AI-powered chatbot.

Have any feedback for us? Send it to info@tiledesk.com
